🧩 1. Introduction: The Next Leap in AI Hardware

Artificial Intelligence has evolved at lightning speed — from software-based deep learning to specialized hardware like GPUs and TPUs.

But now, a new generation of computing is emerging that goes beyond conventional digital logic — Neuromorphic Chips.

These chips mimic how the human brain processes information — using neurons, synapses, and spikes — to achieve unprecedented efficiency and adaptability.

Imagine a chip that learns and adapts in real time, consumes minimal power, and performs complex cognitive tasks like pattern recognition, motion prediction, and decision-making — all at the edge, without cloud dependency.

This is neuromorphic computing, and it’s set to reshape the future of AI across industries — from robotics to healthcare to autonomous systems.

🧠 2. What Is Neuromorphic Computing?

🧬 Definition

Neuromorphic computing refers to a hardware and software design approach that imitates the architecture and functioning of the human brain.

Instead of clocked logic that shuttles dense numeric values, neuromorphic systems rely on spiking neurons that transmit information as brief electrical pulses (spikes), much like biological neurons. The information lies in when, and how often, a neuron fires.

🧩 Core Concept

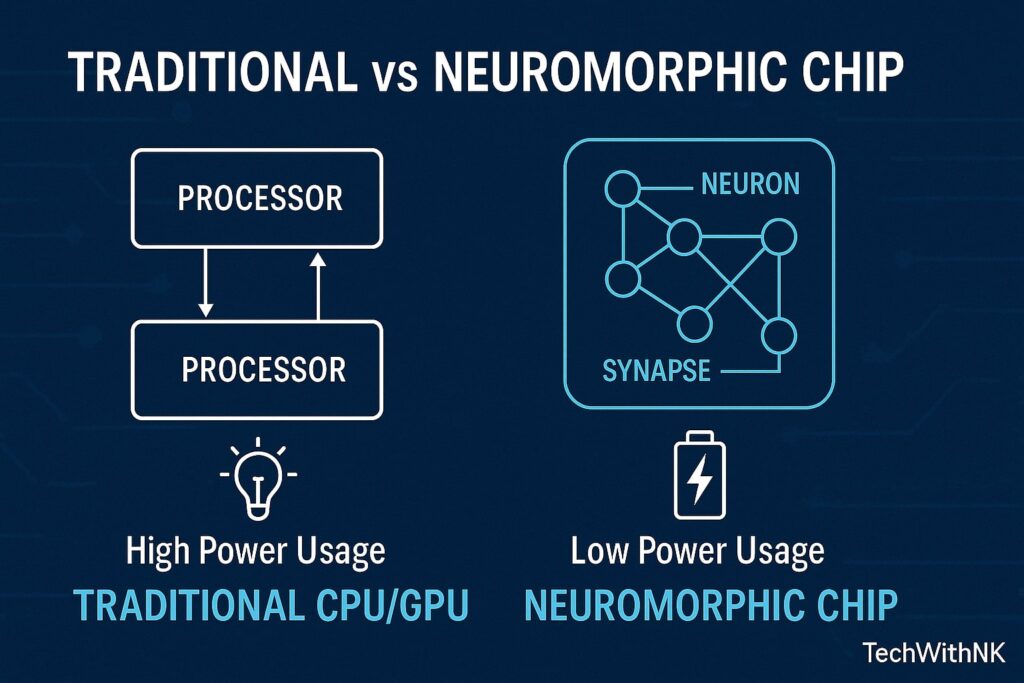

| Traditional Computer | Neuromorphic Computer |
|---|---|
| Sequential data processing | Parallel, event-driven processing |
| Separate CPU and memory | Integrated compute-memory architecture |
| High energy consumption | Ultra-low power consumption |
| Digital logic (bits) | Spiking neurons (analog-digital mix) |

⚙️ Biological Inspiration

Neuron: Computes information through spikes.

Synapse: Adjusts connection strength (learning).

Plasticity: Adapts based on experience (Hebbian learning).

Neuromorphic systems replicate these biological elements through electronic circuits, enabling adaptive and efficient intelligence.

⚡ 3. Why We Need Neuromorphic Chips

🌍 The Challenge with Current AI Hardware

Energy Hunger: Data centers running large AI models consume megawatts of power.

Latency: Cloud-based AI models can’t respond instantly in edge scenarios.

Data Bottlenecks: Transferring large datasets between memory and processor slows performance (the von Neumann bottleneck).

💡 The Neuromorphic Advantage

Energy-efficient: Many chips run at milliwatt-scale power, compared with tens to hundreds of watts for a GPU.

Event-driven: Processes data only when needed.

Scalable: Handles complex networks with millions of neurons.

Edge-ready: Ideal for IoT, robotics, and autonomous devices.
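To make the "event-driven" point concrete, here is a rough sketch that counts operations for one layer. The layer sizes and the 2% activity level are assumptions chosen for illustration, and the function names are hypothetical. It compares a dense layer, where every input touches every output, with an event-driven layer, where only inputs that spiked do any work.

```python
from typing import Sequence


def dense_layer_ops(n_inputs: int, n_outputs: int) -> int:
    """Multiply-accumulates for one dense ANN layer: every input touches every output."""
    return n_inputs * n_outputs


def event_driven_ops(spikes: Sequence[int], n_outputs: int) -> int:
    """Synaptic updates for one SNN time step: only inputs that spiked do any work."""
    active = sum(1 for s in spikes if s)
    return active * n_outputs


# Assumed activity: 20 of 1000 inputs spike in this time step (2%).
spikes = [0] * 1000
for i in range(0, 1000, 50):
    spikes[i] = 1

dense = dense_layer_ops(len(spikes), 100)
sparse = event_driven_ops(spikes, 100)
print(dense, sparse)  # → 100000 2000
```

Operation counts are only a proxy for energy, and an SNN usually runs for many time steps per input, so the real saving depends on how sparse the activity stays over time.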

🧠 “Neuromorphic chips could make AI run like a brain — fast, adaptive, and energy-conscious.”

🧱 4. How Neuromorphic Chips Work

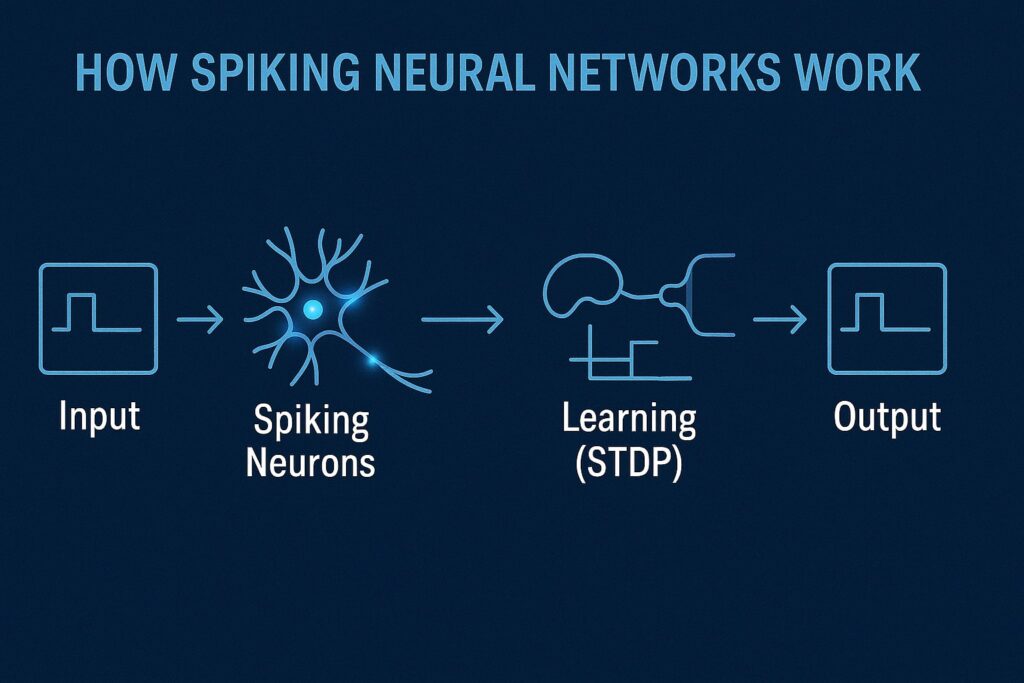

Neuromorphic systems use Spiking Neural Networks (SNNs) instead of traditional Artificial Neural Networks (ANNs).

🌀 Spiking Neural Networks (SNNs)

Neurons fire only when input crosses a threshold — creating spikes.

These spikes carry timing information (not just values), allowing temporal learning.

SNNs can process sensory data (vision, sound, touch) more naturally.
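The threshold behavior described above can be sketched with a leaky integrate-and-fire (LIF) neuron, one of the simplest spiking neuron models. This is a minimal discrete-time sketch, not how any particular chip implements it. The function name and the `threshold`, `leak`, and `v_reset` values are illustrative assumptions.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, v_reset=0.0):
    """Discrete-time leaky integrate-and-fire neuron.

    Returns a list with one 0/1 entry per time step (1 = spike).
    """
    v = 0.0  # membrane potential
    spikes = []
    for current in input_current:
        v = leak * v + current      # decay the old potential, add new input
        if v >= threshold:          # fire only when the threshold is crossed
            spikes.append(1)
            v = v_reset             # reset after the spike
        else:
            spikes.append(0)
    return spikes


print(simulate_lif([0.3] * 8))         # → [0, 0, 0, 1, 0, 0, 0, 1]
print(sum(simulate_lif([0.05] * 100)))  # → 0 (input too weak to ever reach threshold)
```

With a constant input of 0.3 the neuron needs four steps to charge up before each spike, so the input strength shows up as spike timing rather than as a single output value.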

⚙️ Components of a Neuromorphic Chip

Artificial Neurons: Mimic biological firing behavior.

Synapses: Represent weights that change dynamically.

Learning Engine: Implements spike-based learning (STDP – Spike-Timing-Dependent Plasticity).

Memory Integration: Memory and processing occur in the same physical space.

🔄 Operation Flow

Input data is converted to spikes

Neurons process spikes in parallel

Synaptic weights adapt through learning rules

Output is generated as spike trains

This allows massive parallelism and energy-efficient computation — similar to how the human brain operates.
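The first step of the flow above, turning input values into spikes, is often done with rate coding. The sketch below assumes inputs already normalized to the range 0 to 1 and uses a simple Bernoulli encoder. The function names and the fixed seed are illustrative choices, and real systems may use other schemes such as latency coding.

```python
import random


def rate_encode(values, n_steps, seed=0):
    """Bernoulli rate coding: at each time step, channel i spikes with probability values[i]."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    return [[1 if rng.random() < v else 0 for v in values] for _ in range(n_steps)]


def spike_counts(spike_train):
    """Total spikes per input channel across all time steps (a simple rate readout)."""
    return [sum(channel) for channel in zip(*spike_train)]


train = rate_encode([0.0, 0.5, 1.0], n_steps=200)
counts = spike_counts(train)
print(counts)  # channel 0 never spikes, channel 2 spikes every step, channel 1 about half the time
```

Counting spikes per channel, as `spike_counts` does, is also one simple way to read out the spike trains produced in the last step of the flow.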

🧠 5. Neuromorphic vs Traditional AI Chips

| Feature | GPU/TPU | Neuromorphic Chip |
|---|---|---|
| Architecture | Clocked, dense parallel (SIMD/matrix) | Event-driven, sparse parallel |
| Power Usage | High (tens to hundreds of watts) | Very low (milliwatts) |
| Learning Type | Backpropagation | On-chip Hebbian / STDP |
| Real-time Adaptation | Limited | Continuous and local |
| Best Use | Cloud AI Training | Edge and adaptive systems |

Neuromorphic chips are a candidate for the next step after GPUs and TPUs, trading brute-force dense computation for sparse, event-driven processing.

🧰 6. Leading Neuromorphic Projects & Chips

🔷 Intel Loihi (Loihi 1 & Loihi 2)

Loihi 1 implements about 130,000 neurons and 130 million synapses per chip; Loihi 2 raises the neuron count to as many as 1 million per chip.

Supports real-time learning with minimal power.

Applications: robotics, odor recognition, path planning.

🟣 IBM TrueNorth

1 million programmable neurons, 256 million synapses.

Consumes about 70 milliwatts in typical operation, far less than a GPU.

Used in pattern recognition and real-time vision.

🔶 BrainScaleS (Heidelberg University)

Analog-digital hybrid model for ultra-fast brain simulations.

The first-generation system runs roughly 10,000× faster than biological real time.

🔵 SpiNNaker (University of Manchester)

Built around one million ARM cores, with the design goal of simulating up to a billion neurons in real time.

Focus on large-scale neural modeling.

🧩 SynSense (China/Europe)

Commercial edge neuromorphic processors.

Used in smart vision sensors and autonomous drones.

🧠 7. How Neuromorphic Chips Learn: The Science of STDP

🔄 Spike-Timing-Dependent Plasticity (STDP)

STDP is one of the most widely used learning rules in neuromorphic systems.

It strengthens or weakens a synapse based on the timing difference between presynaptic and postsynaptic spikes.

If the presynaptic neuron fires shortly before the postsynaptic neuron → strengthen the connection.

If it fires shortly after → weaken the connection.

This timing-based adaptation allows neuromorphic systems to learn in real-time, without backpropagation or large labeled datasets.
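The timing rule above is commonly modeled with a pair-based exponential window, where spike pairs that are closer in time cause larger weight changes. The sketch below follows that standard textbook form. The amplitudes (`a_plus`, `a_minus`), time constants (in milliseconds), weight bounds, and function names are illustrative assumptions rather than values from any specific chip.

```python
import math


def stdp_delta_w(t_pre, t_post, a_plus=0.01, a_minus=0.012,
                 tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for one pre/post spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:   # pre fired before post: strengthen
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:   # pre fired after post: weaken
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0   # simultaneous spikes: no change in this sketch


def apply_stdp(w, t_pre, t_post, w_min=0.0, w_max=1.0):
    """Update a weight and clip it to the allowed range."""
    return min(w_max, max(w_min, w + stdp_delta_w(t_pre, t_post)))


print(stdp_delta_w(10.0, 15.0) > 0)  # → True (pre before post)
print(stdp_delta_w(15.0, 10.0) < 0)  # → True (pre after post)
```

Each update uses only the spike times of the two neurons a synapse connects, which is why the rule can run locally on-chip without a global backward pass.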

📈 Example

A robot with neuromorphic sensors can learn to avoid obstacles by repeatedly interacting with its environment — self-learning without supervision.

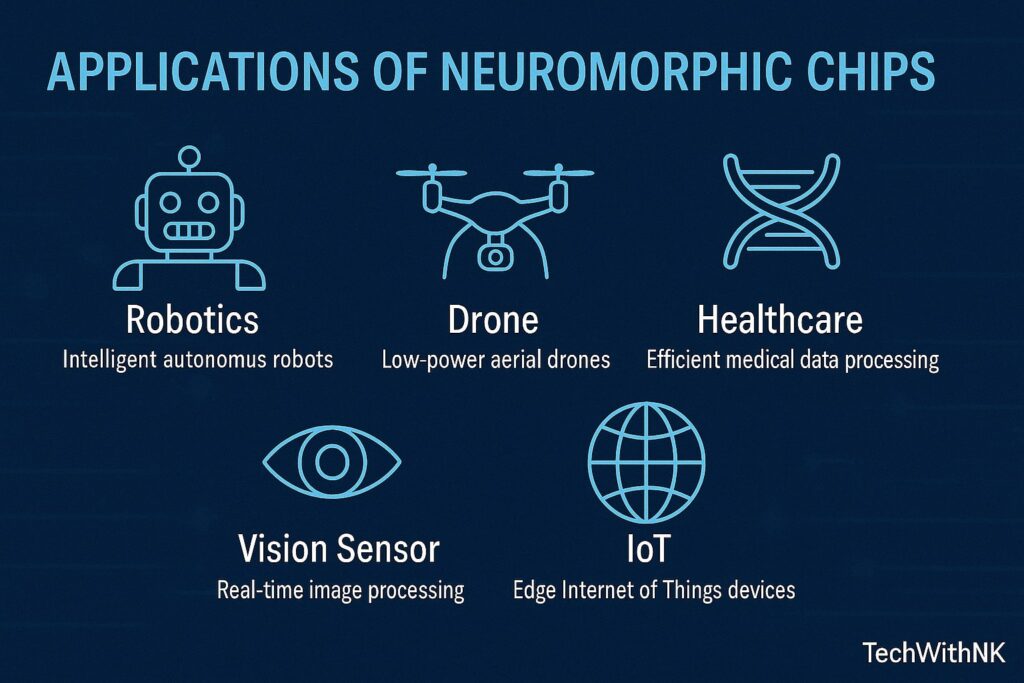

🚀 8. Real-World Applications

🤖 1. Edge AI & Robotics

Real-time decision-making with low power.

Drones and autonomous vehicles using Loihi-like chips can react faster than systems that depend on a round trip to the cloud.

🧩 2. Healthcare & Neural Prosthetics

Neuromorphic implants are being explored as a way to interface with the nervous system.

Research applications include brain-machine interfaces and seizure detection.

📷 3. Vision Sensors

Event-based cameras (like Prophesee) process motion as spikes.

Ideal for high-speed object tracking and AR/VR.
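As a rough illustration of how motion becomes spikes, the sketch below compares two small brightness frames and emits an event only where a pixel changed by at least a threshold. This is a frame-based simplification with a hypothetical function name and threshold. Real event cameras work asynchronously per pixel, typically on changes in log intensity, and do not capture frames at all.

```python
def frame_to_events(prev_frame, curr_frame, threshold=0.2):
    """Emit (row, col, polarity) events where brightness changed by at least `threshold`."""
    events = []
    for r, (prev_row, curr_row) in enumerate(zip(prev_frame, curr_frame)):
        for c, (before, after) in enumerate(zip(prev_row, curr_row)):
            diff = after - before
            if diff >= threshold:
                events.append((r, c, +1))   # pixel got brighter (ON event)
            elif diff <= -threshold:
                events.append((r, c, -1))   # pixel got darker (OFF event)
    return events


# A bright dot moves one pixel to the right; the background is unchanged.
prev = [[0.1, 0.1, 0.1],
        [0.1, 0.9, 0.1],
        [0.1, 0.1, 0.1]]
curr = [[0.1, 0.1, 0.1],
        [0.1, 0.1, 0.9],
        [0.1, 0.1, 0.1]]

print(frame_to_events(prev, curr))  # → [(1, 1, -1), (1, 2, 1)]
```

Nine pixels produce only two events here, because the static background generates no data.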

🌐 4. IoT Devices

Smart sensors that only wake when detecting change — reducing data transmission.

🧠 5. Autonomous Systems

Neuromorphic chips allow adaptive behavior — e.g., robots learning to balance or navigate complex terrain.

⚙️ 9. Neuromorphic Hardware & Software Ecosystem

🧩 Hardware Examples

| Company | Chip | Key Feature |
|---|---|---|
| Intel | Loihi 2 | On-chip learning, scalable fabric |
| IBM | TrueNorth | Million-neuron architecture |
| SynSense | DYNAP-SE | Edge neuromorphic vision |
| BrainChip | Akida | Commercial neuromorphic edge chip |

🧩 Software Frameworks

| Platform | Purpose |
|---|---|
| Nengo | SNN modeling and simulation |
| SpiNNTools | SpiNNaker programming |
| Lava (Intel) | Open-source neuromorphic software |
| PyNN | Common interface for neuromorphic platforms |

These ecosystems allow researchers and developers to build, simulate, and deploy neuromorphic applications efficiently.

⚙️ 10. Comparison: ANN vs SNN (Neuromorphic Approach)

| Feature | ANN (Deep Learning) | SNN (Neuromorphic) |
|---|---|---|
| Data Type | Continuous | Discrete spikes |
| Power | High | Ultra-low |
| Learning | Offline, batch | Online, continuous |
| Temporal Processing | Limited | Natural and event-based |
| Hardware | GPU/TPU | Neuromorphic Chip |

SNNs bring us closer to biological realism, enabling systems that see, hear, and react like living organisms.

🔮 11. Future of Neuromorphic Computing

🌟 Key Research Directions

Hybrid Systems: Combining classical and neuromorphic architectures.

3D Integration: Stacking synaptic layers to mimic brain density.

AI + Neuroscience Fusion: Using brain studies to refine hardware models.

Quantum + Neuromorphic Computing: Merging quantum randomness with spiking logic.

🚀 Industry Forecast

By 2030, neuromorphic chips could become mainstream in:

Smartphones and wearables (context-aware computing)

Smart surveillance and drones

Adaptive industrial automation

Personalized healthcare monitoring

“Neuromorphic chips are not just faster AI chips — they are the beginning of electronic brains.”

⚡ 12. Challenges and Limitations

Programming Complexity: Training SNNs requires new paradigms.

Toolchain Maturity: Limited software and datasets.

Standardization Issues: Lack of universal benchmarks.

Scalability: True brain-level simulation still far away.

Yet, rapid progress in materials, algorithms, and fabrication is closing these gaps.

🌍 13. India’s Role and Research in Neuromorphic Computing

India is emerging as a key player in this field through:

IIT Bombay and IISc Bengaluru working on brain-inspired circuits.

CDAC and DRDO exploring neuromorphic defense systems.

Startups in Bengaluru and Hyderabad focusing on AI edge chips and sensor fusion.

With the Make in India initiative and the India Semiconductor Mission, the foundation for neuromorphic R&D is strong.

🧠 14. Neuromorphic vs Quantum vs Classical AI

| Feature | Neuromorphic | Quantum | Classical |
|---|---|---|---|
| Inspiration | Brain | Quantum Physics | Mathematics |
| Computation | Spikes (events) | Qubits (superposition) | Binary logic |
| Power | Ultra-efficient | High (cooling) | Moderate |
| Status | Emerging | Research stage | Mature |
| Best for | Adaptive edge AI | Optimization, cryptography | General AI workloads |

The three approaches are complementary. One possible division of labor puts neuromorphic hardware on perception, quantum hardware on optimization, and classical hardware on control, forming a tri-layer future AI stack.

🏁 15. Conclusion: Toward Truly Intelligent Machines

The human brain remains the gold standard of intelligence — compact, efficient, and self-learning.

Neuromorphic chips are the closest attempt to replicate its essence in silicon.

They promise:

AI that learns continuously

Machines that sense context and emotion

Smart devices that think locally

As the world shifts from “data-driven AI” to “brain-inspired AI,” neuromorphic computing stands as the bridge between biology and technology — a new dawn for artificial intelligence.

🧠 The future of AI isn’t just faster — it’s smarter, organic, and inspired by the human brain.

What makes neuromorphic chips different from GPUs?

Neuromorphic chips use spiking neurons and event-driven processing, while GPUs rely on dense, clocked matrix operations. For sparse, event-driven workloads, neuromorphic chips can be far more energy-efficient.

Can neuromorphic chips replace CPUs or GPUs?

Not yet. They complement existing systems for specific low-power, adaptive tasks — especially at the edge.

What is an example of a neuromorphic chip?

Intel’s Loihi 2 and IBM’s TrueNorth are well-known examples used in research and robotics.